2025

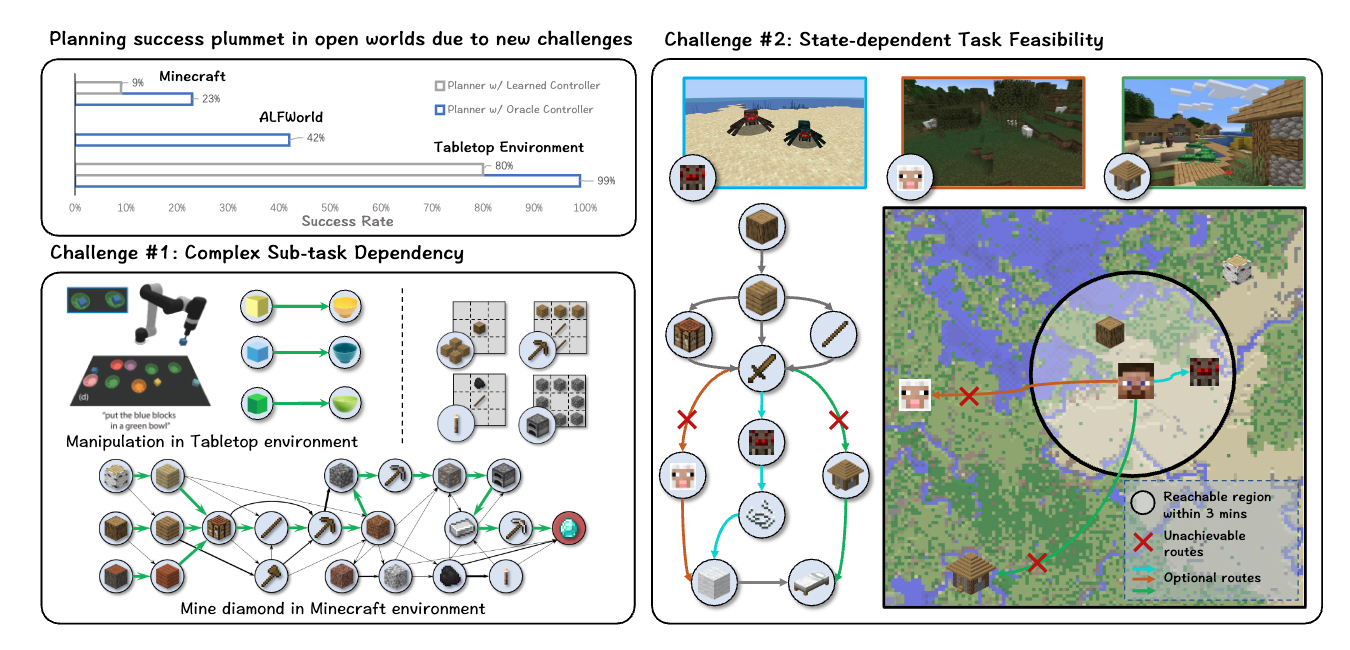

Scalable Multi-Task Reinforcement Learning for Generalizable Spatial Intelligence in Visuomotor Agents

Shaofei Cai*, Zhancun Mu*, Haiwen Xia, Bowei Zhang, Anji Liu, Yitao Liang (* equal contribution)

arXiv 2025

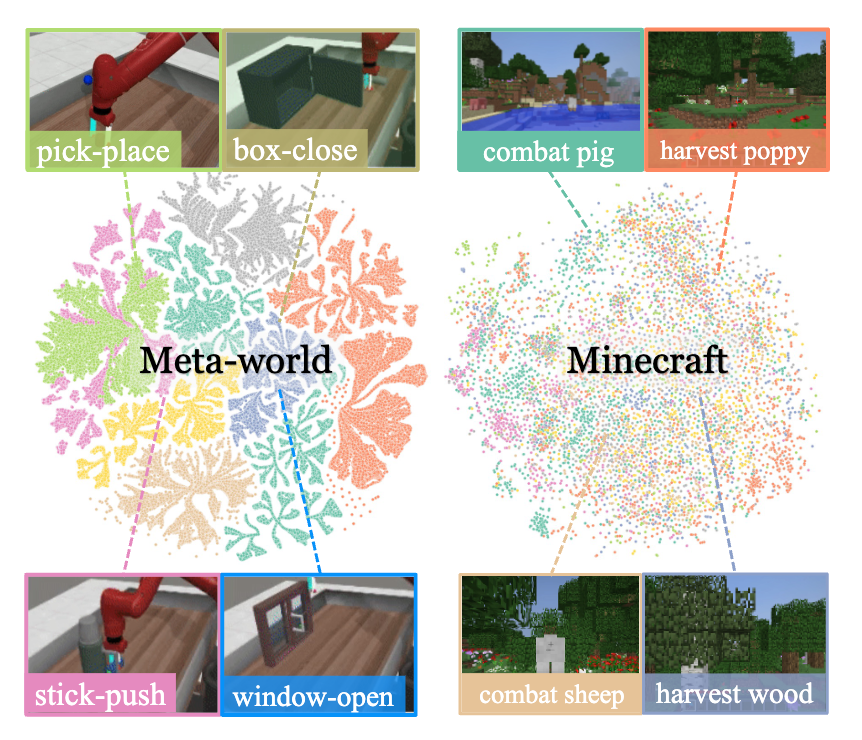

The first multi-task policy trained with reinforcement learning in the Minecraft world, demonstrating zero-shot generalization to other 3D domains.

ROCKET-2: Steering Visuomotor Policy via Cross-View Goal Alignment

Shaofei Cai, Zhancun Mu, Anji Liu, Yitao Liang

Proceedings of the AAAI Conference on Artificial Intelligence (AAAI'26) 2025

We aim to develop a goal specification method that is semantically clear, spatially sensitive, and intuitive for human users to guide agent interactions in embodied environments. Specifically, we propose a novel cross-view goal alignment framework that allows users to specify target objects using segmentation masks from their own camera views rather than the agent's observations.

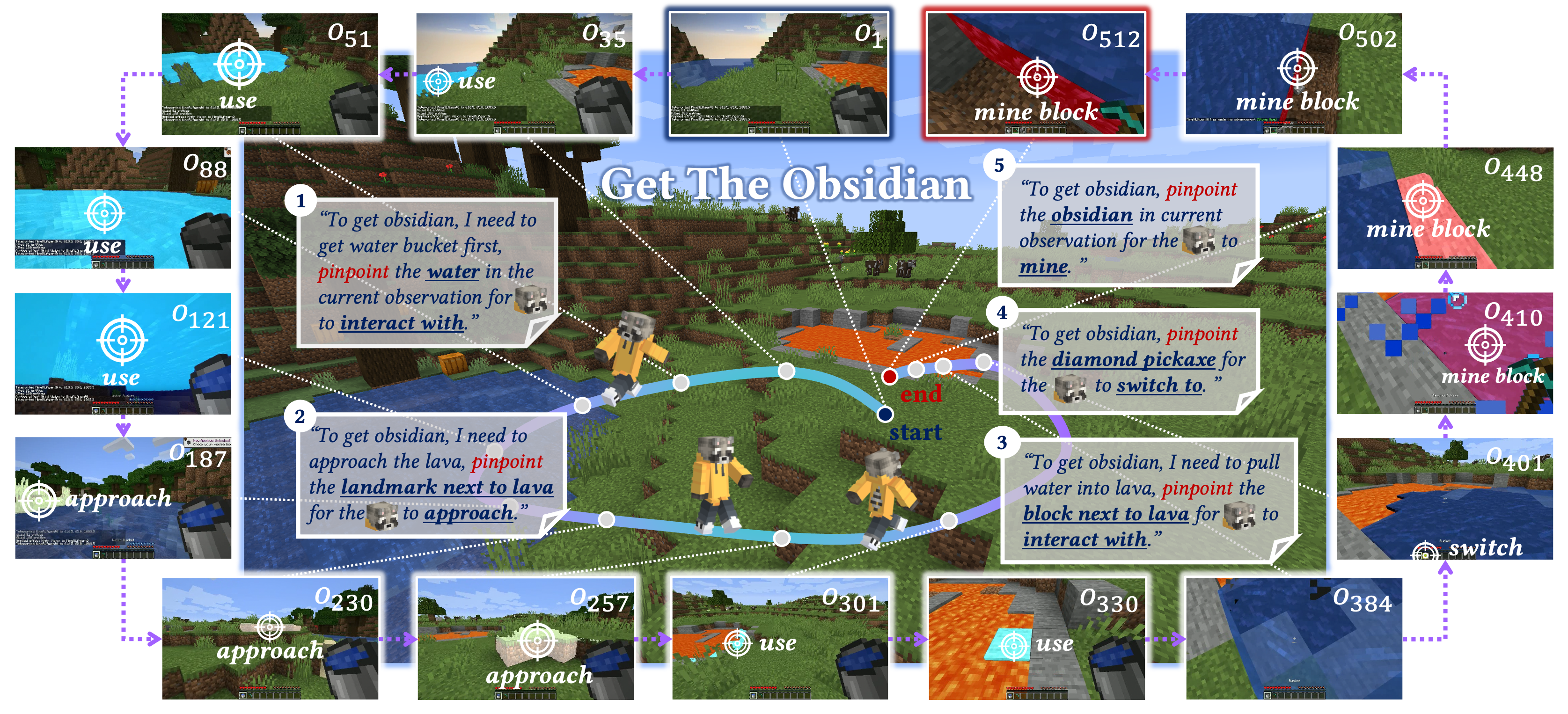

ROCKET-1: Mastering Open-World Interaction with Visual-Temporal Context Prompting

Shaofei Cai, Zihao Wang, Kewei Lian, Zhancun Mu, Xiaojian Ma, Anji Liu, Yitao Liang

IEEE/CVF Computer Vision and Pattern Recognition (CVPR'25) 2025

We propose visual-temporal context prompting, a novel communication protocol between VLMs and policy models. This protocol leverages object segmentation from past observations to guide policy-environment interactions. Using this approach, we train ROCKET-1, a low-level policy that predicts actions based on concatenated visual observations and segmentation masks, supported by real-time object tracking from SAM-2.
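
To make the phrase "concatenated visual observations and segmentation masks" concrete, here is a minimal sketch of channel-wise concatenation on a toy frame. It is an illustration only, not the ROCKET-1 implementation: the function name `concat_observation_and_mask`, the nested-list image layout, and the single-channel binary mask are all assumptions made for this example.

```python
from typing import List

Frame = List[List[List[float]]]  # H x W x C image stored as nested lists
Mask = List[List[int]]           # H x W binary segmentation mask


def concat_observation_and_mask(frame: Frame, mask: Mask) -> Frame:
    """Append the mask as one extra channel to every pixel (hypothetical helper)."""
    same_height = len(frame) == len(mask)
    same_width = all(len(fr) == len(mr) for fr, mr in zip(frame, mask))
    if not (same_height and same_width):
        raise ValueError("frame and mask must share the same height and width")
    # Build new pixel lists so the original frame is left untouched.
    return [
        [pixel + [float(m)] for pixel, m in zip(frame_row, mask_row)]
        for frame_row, mask_row in zip(frame, mask)
    ]


# Toy 2x2 RGB frame; the mask marks the top-right pixel as the target object.
frame = [
    [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]],
    [[0.7, 0.8, 0.9], [1.0, 0.0, 0.5]],
]
mask = [[0, 1], [0, 0]]
policy_input = concat_observation_and_mask(frame, mask)
```

Each pixel of `policy_input` now carries four values (RGB plus the mask bit), which is the kind of input a mask-conditioned policy could consume.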

2024

MineStudio: A Streamlined Package for Minecraft AI Agent Development

Shaofei Cai*, Zhancun Mu*, Kaichen He, Bowei Zhang, Xinyue Zheng, Anji Liu, Yitao Liang (* equal contribution)

arXiv 2024

MineStudio is an open-source software package designed to streamline embodied policy development in Minecraft. MineStudio represents the first comprehensive integration of seven critical engineering components: simulator, data, model, offline pretraining, online finetuning, inference, and benchmark, thereby allowing users to concentrate their efforts on algorithm innovation.

GROOT-2: Weakly Supervised Multi-Modal Instruction Following Agents

Shaofei Cai*, Bowei Zhang*, Zihao Wang, Haowei Lin, Xiaojian Ma, Anji Liu, Yitao Liang (* equal contribution)

International Conference on Learning Representations (ICLR'25) 2025

We frame the problem as a semi-supervised learning task and introduce GROOT-2, a multimodal instructable agent trained using a novel approach that combines weak supervision with latent variable models. Our method consists of two key components: constrained self-imitating, which utilizes large amounts of unlabeled demonstrations to enable the policy to learn diverse behaviors, and human intention alignment, which uses a smaller set of labeled demonstrations to ensure the latent space reflects human intentions.

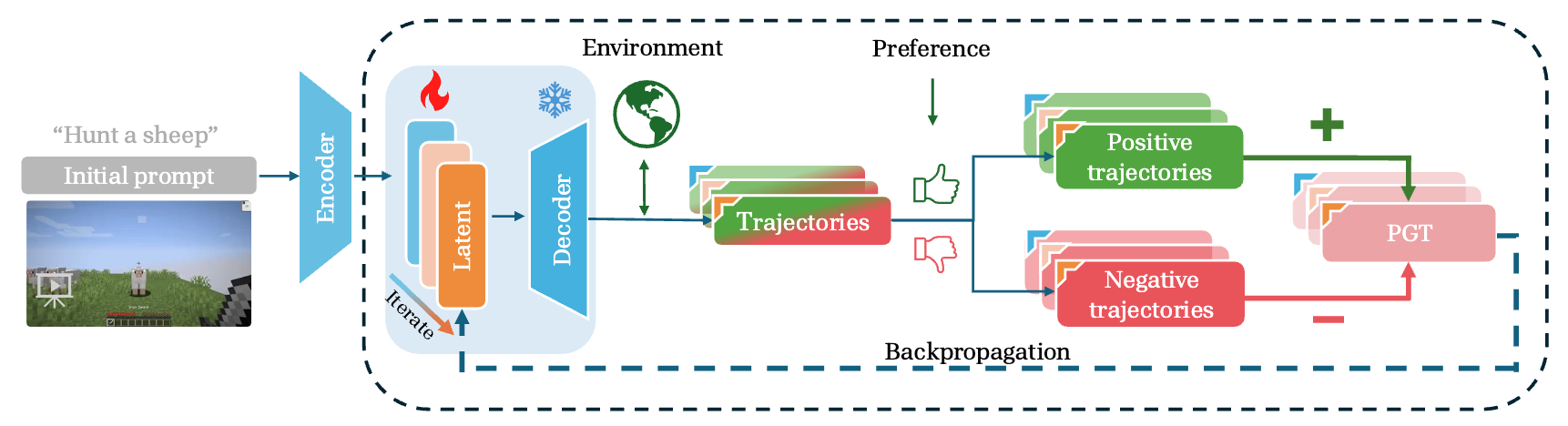

Optimizing Latent Goal by Learning from Trajectory Preference

Guangyu Zhao*, Kewei Lian*, Haowei Lin, Haobo Fu, Qiang Fu, Shaofei Cai, Zihao Wang, Yitao Liang (* equal contribution)

arXiv 2024

This paper proposes a framework named Preference Goal Tuning (PGT). PGT lets an instruction-following policy interact with the environment to collect several trajectories, which are then categorized into positive and negative samples based on preference. We use preference learning to fine-tune the initial goal latent representation with the categorized trajectories while keeping the policy backbone frozen.
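
The idea of tuning only the goal latent while everything else stays fixed can be sketched on a toy problem. The snippet below is a simplified illustration, not the PGT objective from the paper: it assumes a Bradley-Terry style pairwise loss, a linear score `goal · features`, and fixed trajectory feature vectors standing in for whatever a frozen policy backbone would compute. All names (`tune_goal`, `preference_loss`) are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]
Pair = Tuple[Vector, Vector]  # (positive trajectory features, negative trajectory features)


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def preference_loss(goal: Vector, pairs: List[Pair]) -> float:
    """Mean pairwise loss: -log sigmoid(score(pos) - score(neg)). Assumed objective."""
    total = sum(-math.log(sigmoid(dot(goal, pos) - dot(goal, neg))) for pos, neg in pairs)
    return total / len(pairs)


def tune_goal(goal: Vector, pairs: List[Pair], lr: float = 0.1, steps: int = 100) -> Vector:
    """Gradient descent on the goal latent only; trajectory features are never updated."""
    goal = list(goal)
    for _ in range(steps):
        grad = [0.0] * len(goal)
        for pos, neg in pairs:
            margin = dot(goal, pos) - dot(goal, neg)
            # d/d(goal) of -log sigmoid(margin) = -(1 - sigmoid(margin)) * (pos - neg)
            coeff = -(1.0 - sigmoid(margin)) / len(pairs)
            for i in range(len(goal)):
                grad[i] += coeff * (pos[i] - neg[i])
        goal = [g - lr * d for g, d in zip(goal, grad)]
    return goal


# Toy data: preferred trajectories have a larger first feature.
pairs = [([1.0, 0.0], [0.0, 1.0]), ([0.8, 0.1], [0.2, 0.9])]
initial_goal = [0.0, 0.0]
tuned_goal = tune_goal(initial_goal, pairs)
```

After tuning, the latent scores preferred trajectories above rejected ones, while the feature vectors (the stand-in for the frozen backbone) are unchanged.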

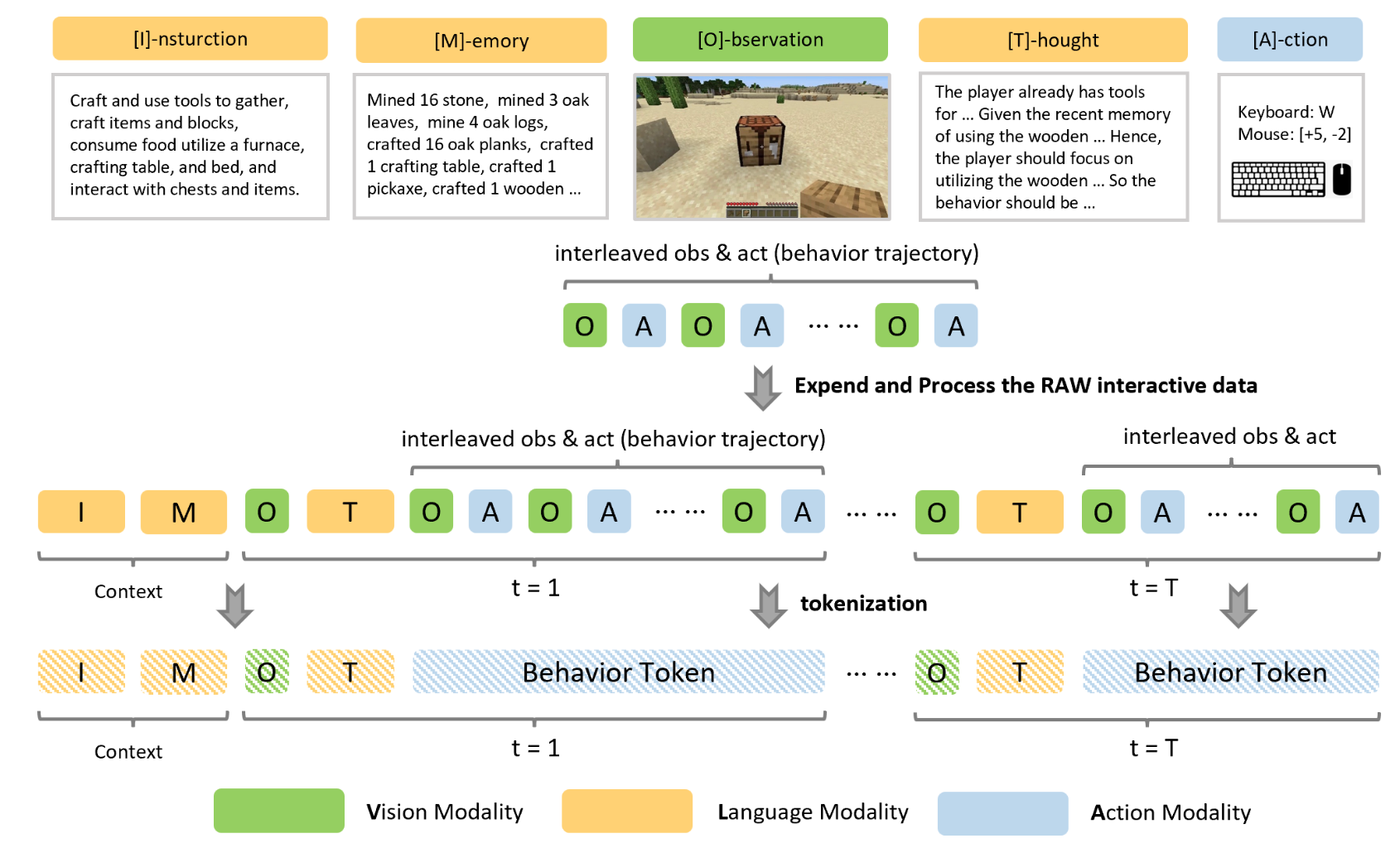

OmniJARVIS: Unified Vision-Language-Action Tokenization Enables Open-World Instruction Following Agents

Zihao Wang, Shaofei Cai, Zhancun Mu, Haowei Lin, Ceyao Zhang, Xuejie Liu, Qing Li, Anji Liu, Xiaojian Ma, Yitao Liang

Neural Information Processing Systems (NeurIPS'24) 2024

This paper presents OmniJARVIS, a novel Vision-Language-Action (VLA) model for open-world instruction-following agents in Minecraft. Compared to prior works that either emit textual goals to separate controllers or produce the control command directly, OmniJARVIS seeks a different path to ensure both strong reasoning and efficient decision-making capabilities via unified tokenization of multimodal interaction data.

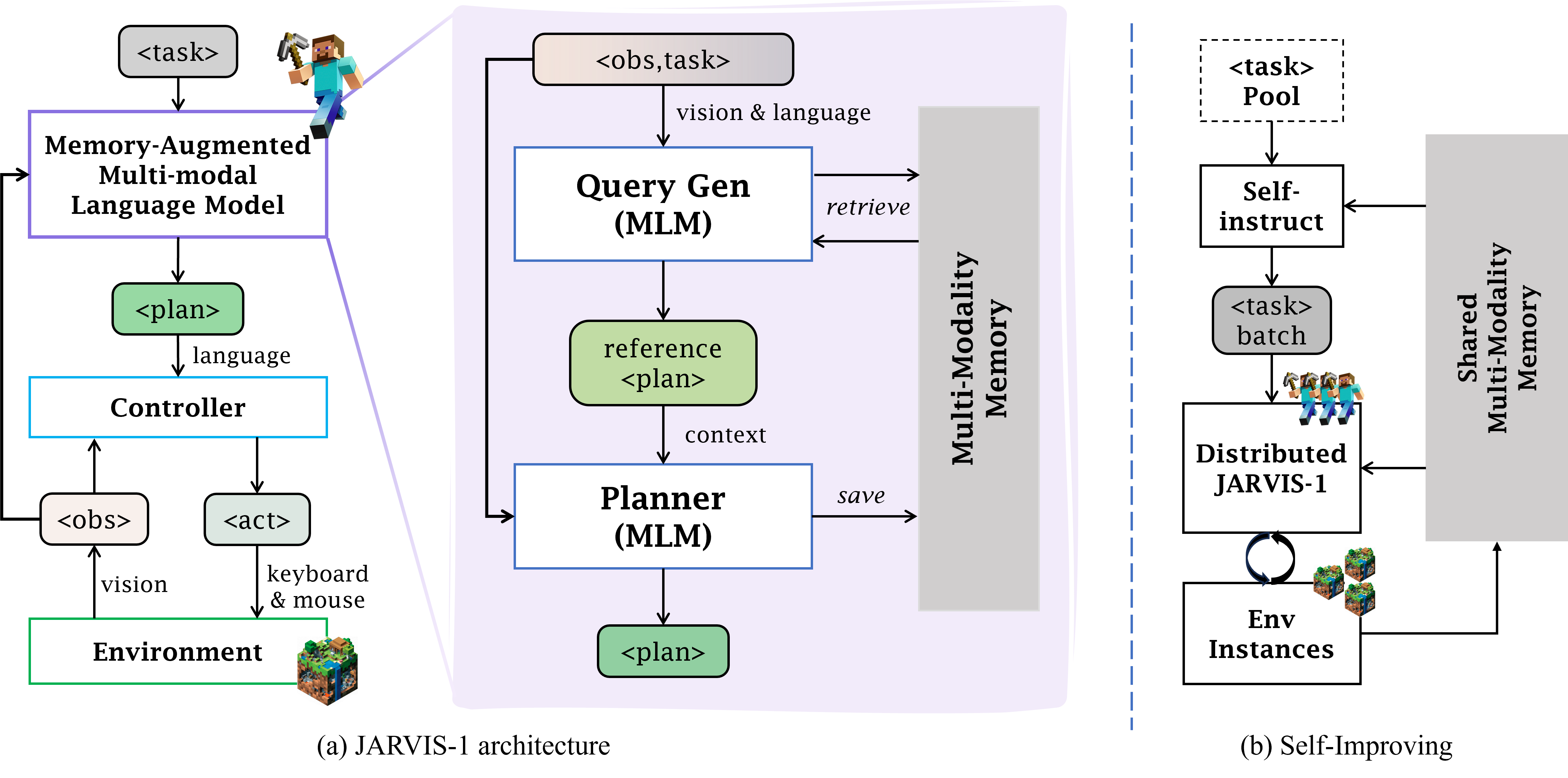

JARVIS-1: Open-World Multi-task Agents with Memory-Augmented Multimodal Language Models

Zihao Wang, Shaofei Cai, Anji Liu, Yonggang Jin, Jinbing Hou, Bowei Zhang, Haowei Lin, Zhaofeng He, Zilong Zheng, Yaodong Yang, Xiaojian Ma, Yitao Liang

IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI'24) 2024

This paper introduces JARVIS-1, an open-world agent that can perceive multimodal input (visual observations and human instructions), generate sophisticated plans, and perform embodied control, all within the popular yet challenging open-world Minecraft universe. Specifically, we develop JARVIS-1 on top of pre-trained multimodal language models, which map visual observations and textual instructions to plans. The plans are ultimately dispatched to goal-conditioned controllers.

2023

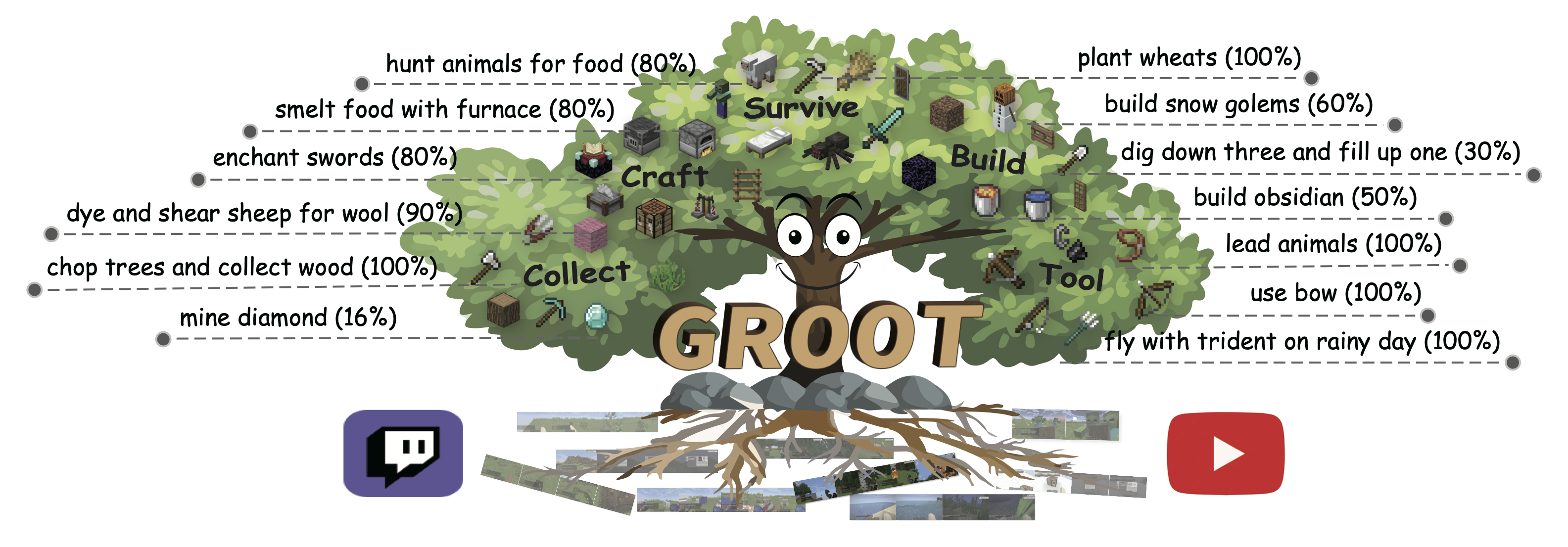

GROOT: Learning to Follow Instructions by Watching Gameplay Videos

Shaofei Cai, Bowei Zhang, Zihao Wang, Xiaojian Ma, Anji Liu, Yitao Liang

International Conference on Learning Representations (ICLR'24) 2024 Spotlight Top 6.2%

This paper studies the problem of building a controller that can follow open-ended instructions in open-world environments. We propose to follow reference videos as instructions, which offer expressive goal specifications while eliminating the need for expensive text-gameplay annotations. A new learning framework is derived to allow learning such instruction-following controllers from gameplay videos while producing a video instruction encoder that induces a structured goal space.

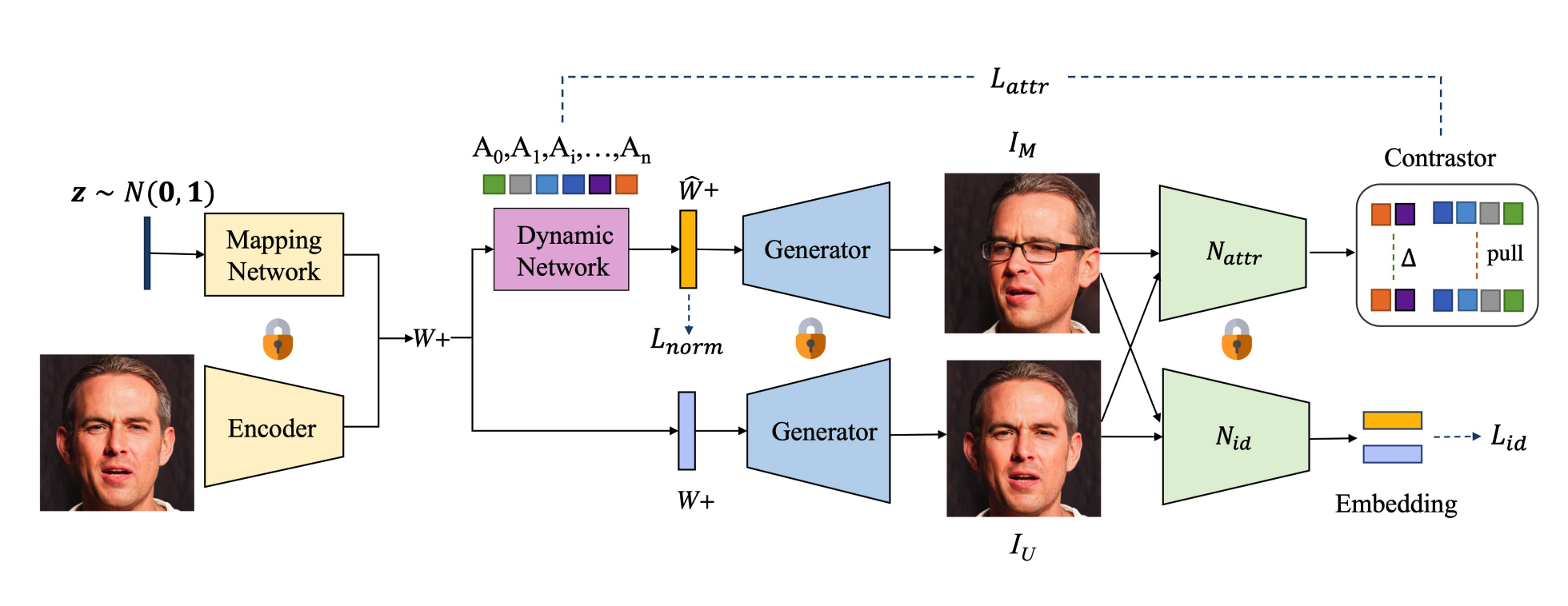

DyStyle: Dynamic Neural Network for Multi-Attribute-Conditioned Style Editing

Bingchuan Li*, Shaofei Cai*, Wei Liu, Peng Zhang, Miao Hua, Qian He, Zili Yi (* equal contribution)

IEEE/CVF Winter Conference on Applications of Computer Vision (WACV'23) 2023

This paper proposes a semantic and correlation disentangled graph convolution method, which builds an image-specific graph and employs graph propagation to reason about the labels effectively. We introduce a semantic disentangling module to extract category-wise semantic features as nodes and a correlation disentangling module to extract image-specific label correlations as edges. Performing graph convolutions on this image-specific graph allows for better mining of difficult labels with weak visual representations.

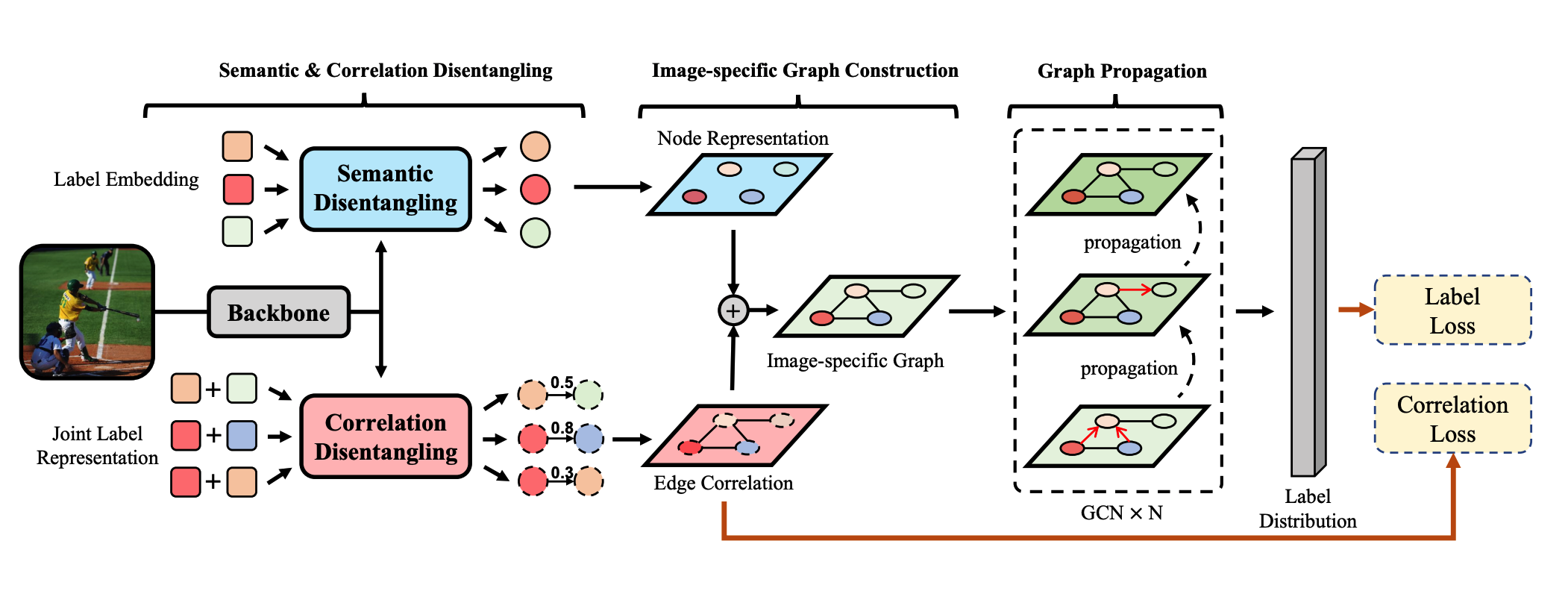

Semantic and Correlation Disentangled Graph Convolutions for Multilabel Image Recognition

Shaofei Cai, Liang Li, Xinzhe Han, Shan Huang, Qi Tian, Qingming Huang

IEEE Transactions on Neural Networks and Learning Systems (TNNLS'23) 2023

This paper proposes a semantic and correlation disentangled graph convolution method, which builds an image-specific graph and employs graph propagation to reason about the labels effectively. We introduce a semantic disentangling module to extract category-wise semantic features as nodes and a correlation disentangling module to extract image-specific label correlations as edges. Performing graph convolutions on this image-specific graph allows for better mining of difficult labels with weak visual representations.
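
For readers unfamiliar with graph propagation over label nodes, the following is a minimal sketch of a single generic graph-convolution step, `ReLU(normalize(A) · H · W)`. It is not the paper's architecture: the row normalization, the identity weight matrix, and the toy three-label graph are assumptions chosen to keep the example small, and the disentangling modules that would produce the nodes and edges are not modeled.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def row_normalize(adj: Matrix) -> Matrix:
    """Scale each row to sum to 1 so propagation averages over neighbors."""
    normalized = []
    for row in adj:
        total = sum(row)
        normalized.append([v / total if total else 0.0 for v in row])
    return normalized


def graph_conv(nodes: Matrix, adj: Matrix, weight: Matrix) -> Matrix:
    """One propagation step: aggregate neighbor features, project, apply ReLU."""
    propagated = matmul(row_normalize(adj), nodes)
    projected = matmul(propagated, weight)
    return [[max(0.0, v) for v in row] for row in projected]


# Toy image-specific graph: labels 0 and 1 are correlated, label 2 is isolated.
nodes = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]  # one feature vector per label
adj = [[1, 1, 0], [1, 1, 0], [0, 0, 1]]       # correlations with self-loops
weight = [[1.0, 0.0], [0.0, 1.0]]             # identity projection for clarity
updated = graph_conv(nodes, adj, weight)
```

In this toy graph the two correlated labels end up sharing features, while the isolated label keeps its own, which is the basic effect that propagation along correlation edges is meant to provide.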

Describe, Explain, Plan and Select: Interactive Planning with Large Language Models Enables Open-World Multi-Task Agents

Zihao Wang, Shaofei Cai, Xiaojian Ma, Anji Liu, Yitao Liang

Neural Information Processing Systems (NeurIPS'23) 2023

This paper identifies two main challenges of learning multi-task policies in open-world environments: 1) the indistinguishability of tasks from the state distribution, due to the vast scene diversity, and 2) the non-stationary nature of environment dynamics caused by partial observability. For the first challenge, we present a Goal-Sensitive Backbone (GSB) that encourages the policy to form goal-relevant visual state representations. For the second challenge, the policy is further equipped with an adaptive horizon prediction module that helps alleviate the learning uncertainty.

Open-World Multi-Task Control Through Goal-Aware Representation Learning and Adaptive Horizon Prediction

Shaofei Cai, Zihao Wang, Xiaojian Ma, Anji Liu, Yitao Liang

IEEE/CVF Computer Vision and Pattern Recognition (CVPR'23) 2023

This paper identifies two main challenges of learning multi-task policies in open-world environments: 1) the indistinguishability of tasks from the state distribution, due to the vast scene diversity, and 2) the non-stationary nature of environment dynamics caused by partial observability. For the first challenge, we present a Goal-Sensitive Backbone (GSB) that encourages the policy to form goal-relevant visual state representations. For the second challenge, the policy is further equipped with an adaptive horizon prediction module that helps alleviate the learning uncertainty.

2022

Automatic Relation-aware Graph Network Proliferation

Shaofei Cai, Liang Li, Xinzhe Han, Jiebo Luo, Zhengjun Zha, Qingming Huang

IEEE/CVF Computer Vision and Pattern Recognition (CVPR'22) 2022 Oral Top 4.2%

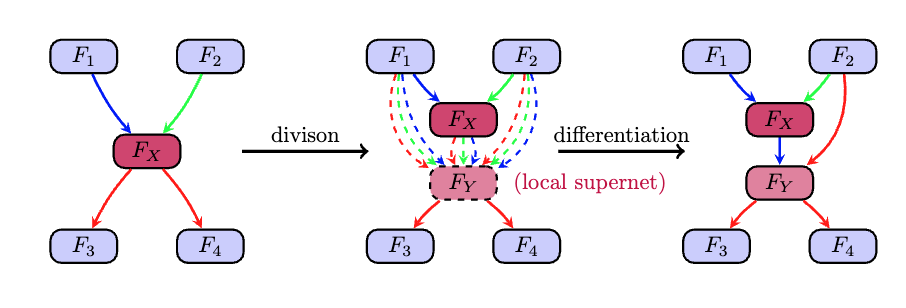

This paper proposes Automatic Relation-aware Graph Network Proliferation (ARGNP) for efficiently searching GNNs with a relation-guided message passing mechanism. Specifically, we first devise a novel dual relation-aware graph search space that comprises both node and relation learning operations. These operations can extract hierarchical node/relational information and provide anisotropic guidance for message passing on a graph. Second, analogous to cell proliferation, we design a network proliferation search paradigm to progressively determine the GNN architectures by iteratively performing network division and differentiation.

2021

Rethinking Graph Neural Architecture Search from Message-Passing

Shaofei Cai, Liang Li, Jincan Deng, Beichen Zhang, Zhengjun Zha, Li Su, Qingming Huang

IEEE/CVF Computer Vision and Pattern Recognition (CVPR'21) 2021

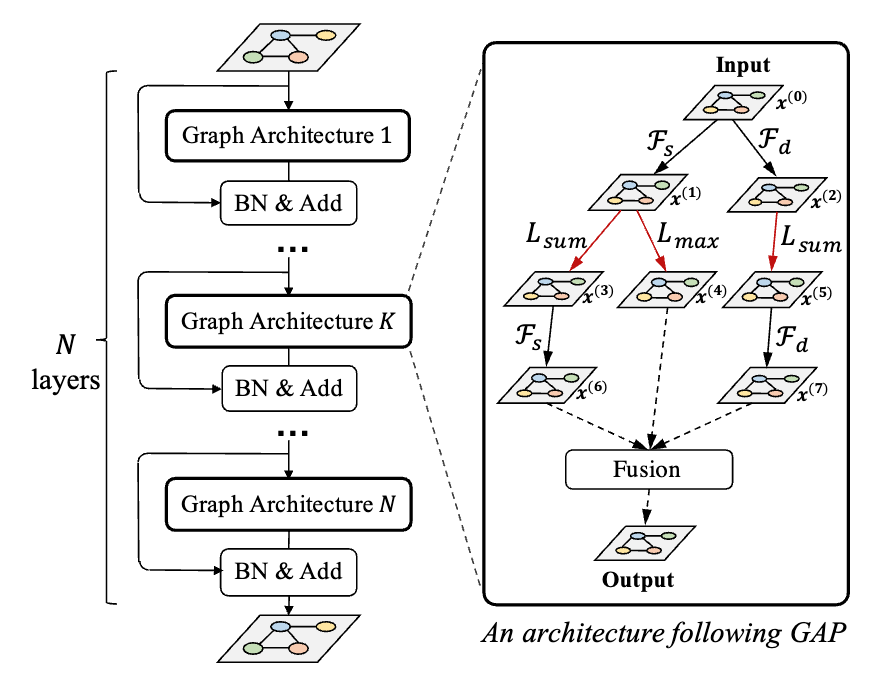

This paper proposes Graph Neural Architecture Search (GNAS) with a newly designed search space. GNAS can automatically learn better architectures with the optimal depth of message passing on the graph. Specifically, we design the Graph Neural Architecture Paradigm (GAP), with a tree-topology computation procedure and two types of fine-grained atomic operations (feature filtering and neighbor aggregation) derived from the message-passing mechanism, to construct a powerful graph network search space.

2020

IR-GAN: Image Manipulation with Linguistic Instruction by Increment Reasoning

Zhenhuan Liu, Jincan Deng, Liang Li, Shaofei Cai, Qianqian Xu, Shuhui Wang, Qingming Huang

ACM International Conference on Multimedia (MM'20) 2020 Oral

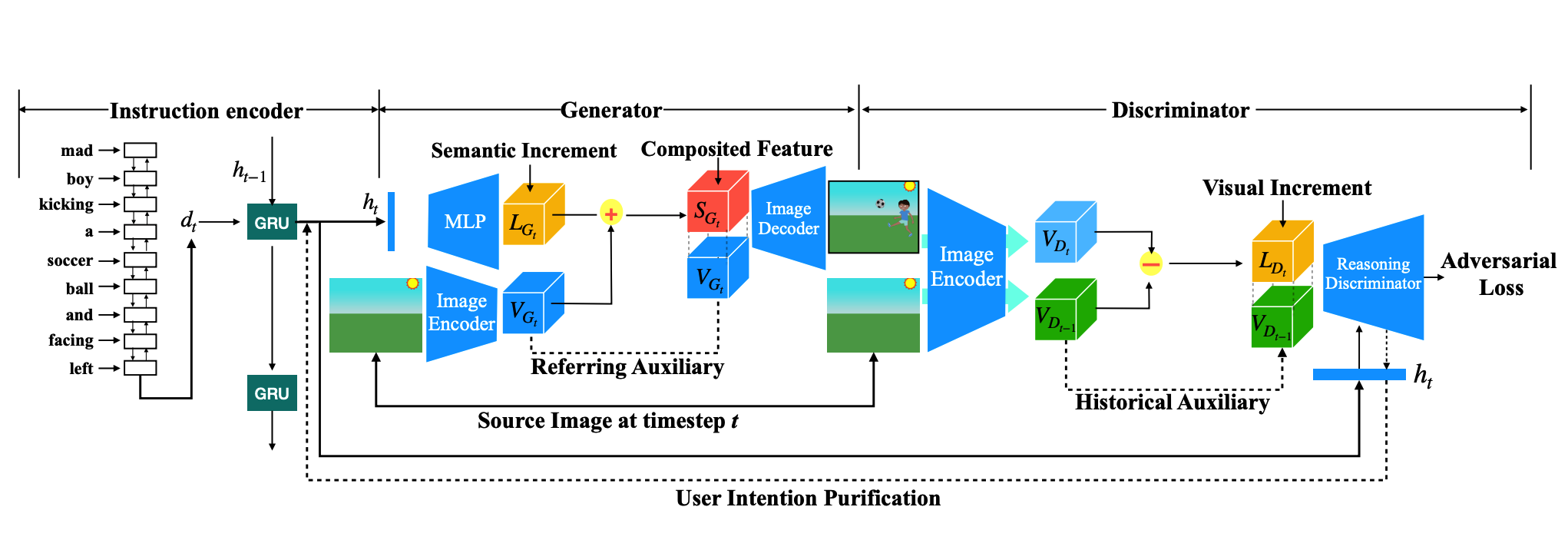

Traditional conditional image generation models mainly focus on generating high-quality and visually realistic images, and fail to resolve the partial consistency between image and instruction. To address this issue, we propose an Increment Reasoning Generative Adversarial Network (IR-GAN), which aims to reason about the consistency between the visual increment in images and the semantic increment in instructions.
