AGI Researcher at DeepSeek AI

Ph.D. Candidate at Peking University

My dream is to witness the emergence of AGI and to contribute my efforts to it.

My doctoral research centered on multi-task agents operating in open-world settings. A core aspect of this work involved identifying and developing a task representation that is highly expressive, low in ambiguity, and scalable for efficient training. In the realm of 3D video games, I led the development of the GROOT and ROCKET series as first author. These projects enable AI agents to carry out intricate tasks in Minecraft from human instructions, pushing the frontier of agent capabilities in that environment. Concurrently, I have applied reinforcement learning to strengthen the visual reasoning abilities of visuomotor agents. I am particularly enthusiastic about creating intelligent agents that can perceive and retain information with the fluidity and coherence of human cognition. I also actively follow progress in general agents, and I strongly believe that the scalable generation and validation of tasks in digital worlds is currently the most viable route to AGI.

Research Interests: Machine Learning, Computer Vision, Reinforcement Learning, Embodied Intelligence, LLM Agents

Education

- Peking University, Institute for Artificial Intelligence
  Ph.D. Candidate, Sep. 2022 - Jul. 2026

- University of Chinese Academy of Sciences, Institute of Computing Technology, Chinese Academy of Sciences
  M.S. in Computer Science, Sep. 2019 - Jul. 2022

- Xi'an Jiaotong University, Software College
  B.Eng. in Software Engineering, Sep. 2015 - Jul. 2019

Experience

- DeepSeek AI, AGI Researcher, Oct. 2025 - now

Honors & Awards

- Gold Medal 🏅 (Top 3.3%) at International Collegiate Programming Contest (ACM-ICPC) Asia Regional, 2018

- Outstanding Student Award at Xi'an Jiaotong University for undergraduate students, 2019

- 3rd Academic Star Award (Top 5 Students) at Peking University, 2025

- Winner (1st place) at ATEC 2025 Robotics and AI Challenge, Software Track, 2025

Selected Publications

Scalable Multi-Task Reinforcement Learning for Generalizable Spatial Intelligence in Visuomotor Agents

Shaofei Cai*, Zhancun Mu*, Haiwen Xia, Bowei Zhang, Anji Liu, Yitao Liang (* equal contribution)

arXiv 2025

The first multi-task policy trained with reinforcement learning in the Minecraft world, demonstrating zero-shot generalization to other 3D domains.

ROCKET-2: Steering Visuomotor Policy via Cross-View Goal Alignment

Shaofei Cai, Zhancun Mu, Anji Liu, Yitao Liang

Proceedings of the AAAI Conference on Artificial Intelligence (AAAI'26) 2025

We aim to develop a goal specification method that is semantically clear, spatially sensitive, and intuitive for human users to guide agent interactions in embodied environments. Specifically, we propose a novel cross-view goal alignment framework that allows users to specify target objects using segmentation masks from their own camera views rather than the agent's observations.

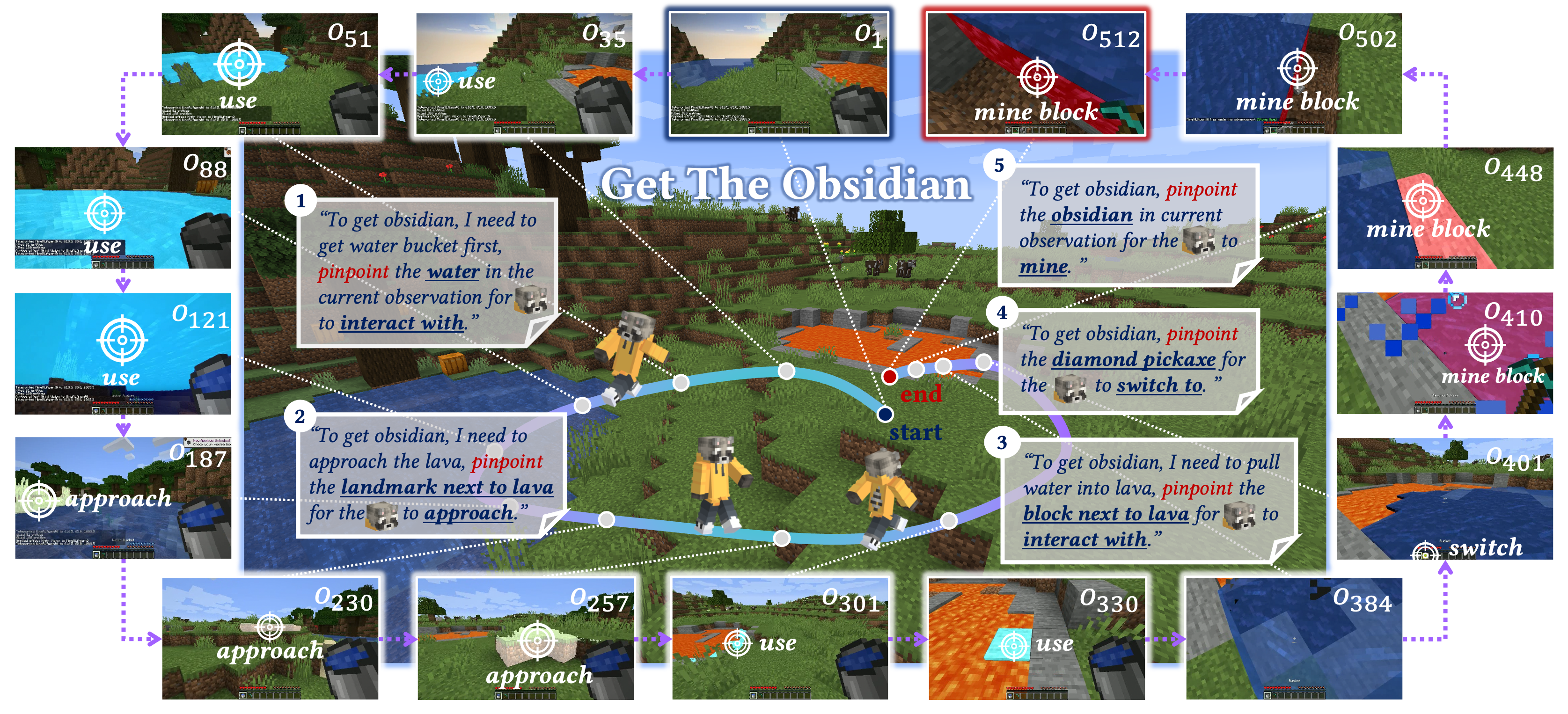

ROCKET-1: Mastering Open-World Interaction with Visual-Temporal Context Prompting

Shaofei Cai, Zihao Wang, Kewei Lian, Zhancun Mu, Xiaojian Ma, Anji Liu, Yitao Liang

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR'25) 2025

We propose visual-temporal context prompting, a novel communication protocol between VLMs and policy models. This protocol leverages object segmentation from past observations to guide policy-environment interactions. Using this approach, we train ROCKET-1, a low-level policy that predicts actions based on concatenated visual observations and segmentation masks, supported by real-time object tracking from SAM-2.

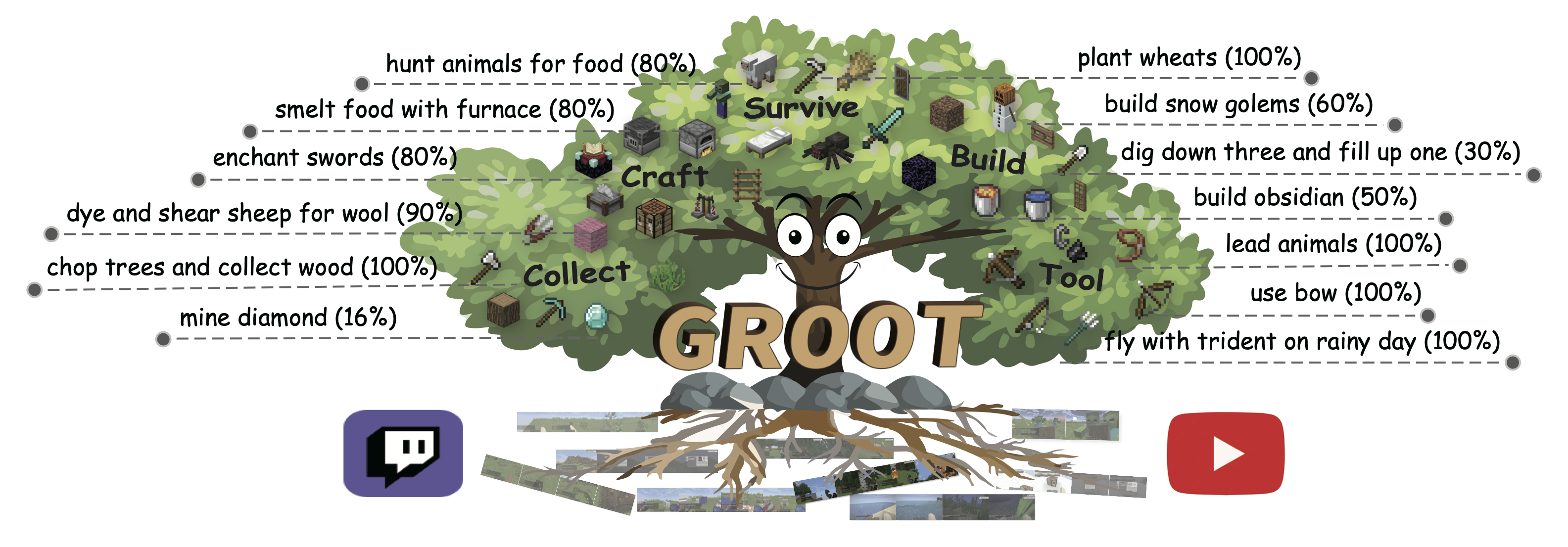

GROOT: Learning to Follow Instructions by Watching Gameplay Videos

Shaofei Cai, Bowei Zhang, Zihao Wang, Xiaojian Ma, Anji Liu, Yitao Liang

International Conference on Learning Representations (ICLR'24) 2024, Spotlight (Top 6.2%)

This paper studies the problem of building a controller that can follow open-ended instructions in open-world environments. We propose to follow reference videos as instructions, which offer expressive goal specifications while eliminating the need for expensive text-gameplay annotations. A new learning framework is derived to allow learning such instruction-following controllers from gameplay videos while producing a video instruction encoder that induces a structured goal space.

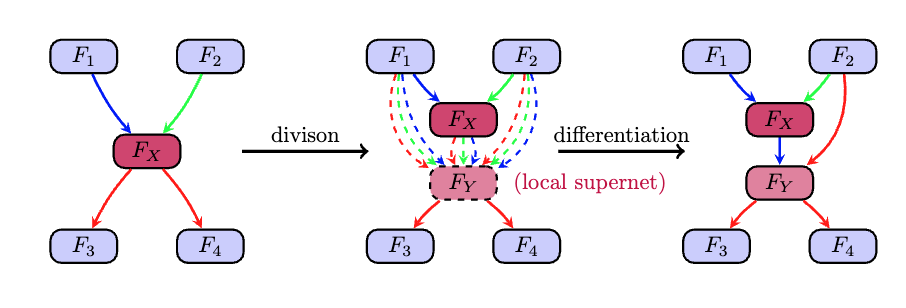

Automatic Relation-aware Graph Network Proliferation

Shaofei Cai, Liang Li, Xinzhe Han, Jiebo Luo, Zhengjun Zha, Qingming Huang

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR'22) 2022, Oral (Top 4.2%)

This paper proposes Automatic Relation-aware Graph Network Proliferation (ARGNP) for efficiently searching GNNs with a relation-guided message passing mechanism. Specifically, we first devise a novel dual relation-aware graph search space that comprises both node and relation learning operations. These operations can extract hierarchical node/relational information and provide anisotropic guidance for message passing on a graph. Second, analogous to cell proliferation, we design a network proliferation search paradigm to progressively determine the GNN architectures by iteratively performing network division and differentiation.
